
OpenAI's Newly Released GPT-4o Mini Dominates the Chatbot Arena. Here's Why.

26 July 2024


Zaker Adham

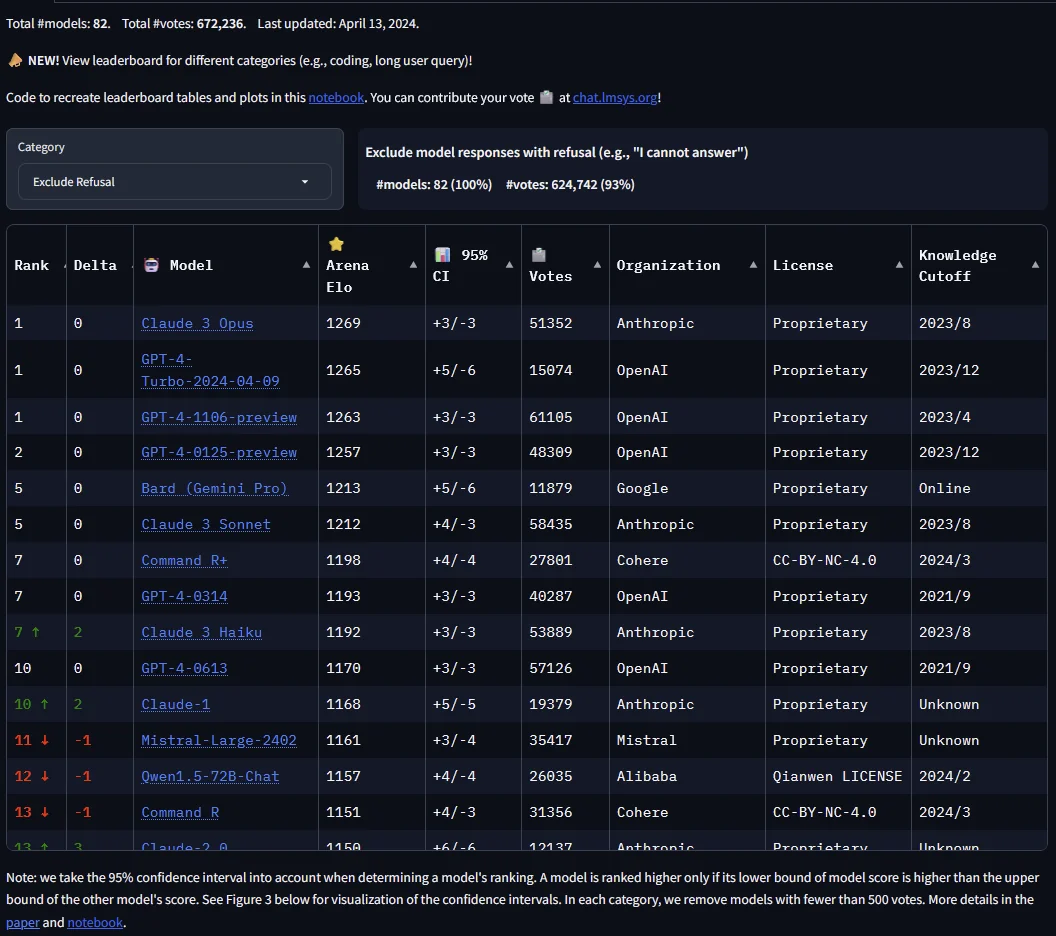

OpenAI’s latest model, GPT-4o Mini, has drawn wide attention in the AI community. Just a week after its release, this compact, cost-effective version of GPT-4o has climbed the leaderboard of the Large Model Systems Organization (LMSYS) Chatbot Arena, outperforming well-established models such as Claude 3.5 Sonnet and Gemini Advanced.

The LMSYS Chatbot Arena is a crowdsourced platform where users compare large language models (LLMs) side by side: a user submits a prompt, two models answer it, and the user votes for the preferred response without knowing which models produced it. Since its debut, GPT-4o Mini has quickly risen to prominence, ranking just behind its larger sibling, GPT-4o. This is particularly impressive given that GPT-4o Mini is about twenty times cheaper.

The swift rise of GPT-4o Mini has sparked discussion on social media, with some users questioning how a new, smaller model could outperform more established ones like Claude 3.5 Sonnet. Responding on its X account, LMSYS explained that Chatbot Arena rankings reflect human preferences, expressed through user votes.

For a clearer picture of each model's capabilities, LMSYS recommends examining the per-category breakdowns on its leaderboard. Selecting categories such as coding, hard prompts, or longer queries shows where each model excels. In the coding category, for instance, GPT-4o Mini ranks third, behind GPT-4o and Claude 3.5 Sonnet. However, it takes the top spot in categories such as multi-turn conversations and longer queries (500 tokens or more).
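To make the per-category idea concrete, here is a minimal Python sketch of how blind, pairwise votes can be turned into a ranking, and how restricting the votes to one category can reorder that ranking. It uses a simple online Elo update, one common way to rank from pairwise comparisons; LMSYS's actual methodology may differ. The vote data, model names, K-factor of 32, and the functions `elo_ratings` and `leaderboard` are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical votes, NOT real Arena data: (left model, right model, outcome, category).
# outcome is "a" (left wins), "b" (right wins) or "tie".
VOTES = [
    ("model-A", "model-B", "a", "coding"),
    ("model-B", "model-C", "b", "multi-turn"),
    ("model-A", "model-C", "tie", "coding"),
    ("model-C", "model-B", "a", "multi-turn"),
    ("model-B", "model-A", "b", "multi-turn"),
]


def elo_ratings(votes, k=32.0, base=1000.0):
    """Online Elo (assumed method, not LMSYS's pipeline): update both ratings after each vote."""
    ratings = defaultdict(lambda: base)
    for left, right, outcome, _category in votes:
        r_left, r_right = ratings[left], ratings[right]
        # Expected score of the left model under the Elo logistic model.
        expected_left = 1.0 / (1.0 + 10 ** ((r_right - r_left) / 400.0))
        score_left = {"a": 1.0, "b": 0.0, "tie": 0.5}[outcome]
        # Zero-sum update: whatever the left model gains, the right model loses.
        ratings[left] = r_left + k * (score_left - expected_left)
        ratings[right] = r_right + k * ((1.0 - score_left) - (1.0 - expected_left))
    return dict(ratings)


def leaderboard(votes, category=None, **kwargs):
    """Rank models by rating, optionally using only the votes from one category."""
    subset = [v for v in votes if category is None or v[3] == category]
    ratings = elo_ratings(subset, **kwargs)
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    print("overall:", leaderboard(VOTES))
    print("coding:", leaderboard(VOTES, category="coding"))
```

Because each Elo update is zero-sum, the ratings only mean something relative to one another, and a model can sit at a different position in the overall and per-category views simply because each view is computed from a different subset of votes.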

If you want to try GPT-4o Mini yourself, log in to your OpenAI account on the ChatGPT site. Alternatively, you can use the Chatbot Arena, where you may encounter GPT-4o Mini: visit the LMSYS website, click Arena (side-by-side), and enter a sample prompt.