ChatGPT Sends Millions to Verified Election News, Blocks 250,000 Deepfake Attempts

AI

Zaker Adham

09 November 2024

04 August 2024 | Paikan Begzad

Summary

As the demand for AI safety and accountability rises, a new report highlights significant shortcomings in current AI model evaluation tests and benchmarks.

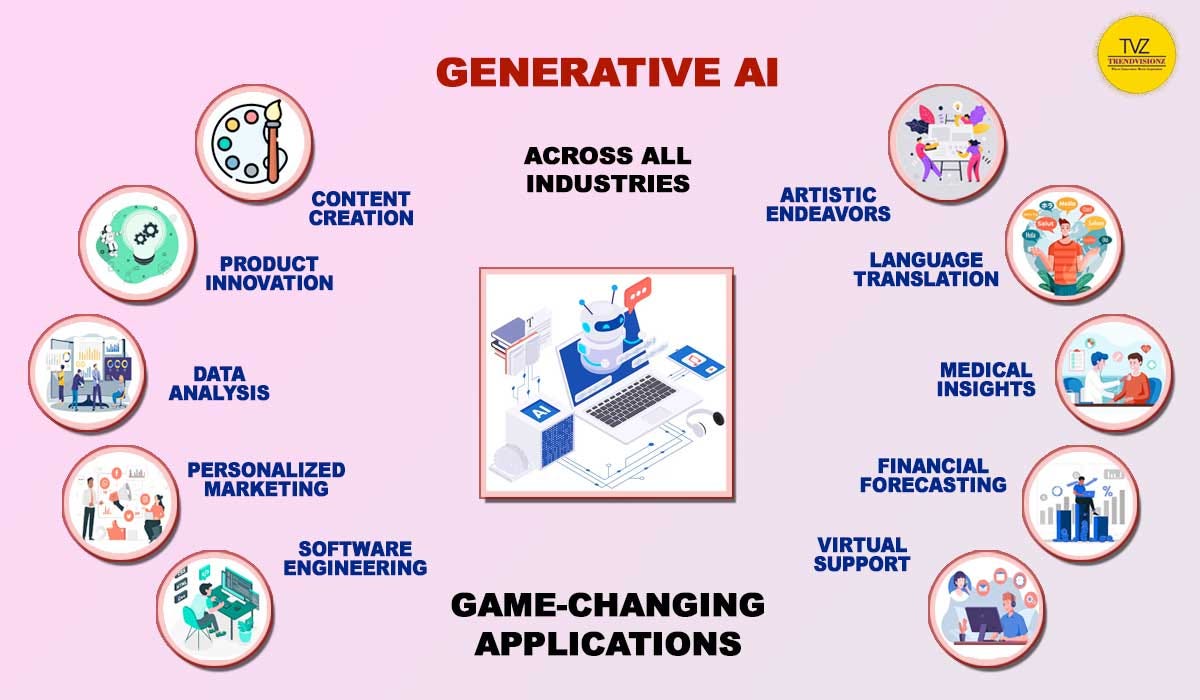

Generative AI models, which can create text, images, music, and video, are under increasing scrutiny for their tendency to make errors and behave unpredictably. Public-sector agencies and tech firms have proposed new benchmarks to assess the safety of these models.

Last year, Scale AI launched a lab to evaluate model safety, while this month saw the release of new assessment tools from NIST and the U.K. AI Safety Institute. Despite these efforts, the Ada Lovelace Institute (ALI), a U.K.-based nonprofit AI research organization, found that existing safety evaluations are inadequate.

The ALI study, involving interviews with experts from academic labs, civil society, and AI vendors, as well as an audit of recent research, revealed that current evaluations are non-exhaustive, easily gamed, and not necessarily indicative of real-world behavior.

Elliot Jones, a senior researcher at ALI and co-author of the report, noted that products such as smartphones, prescription drugs, and cars undergo rigorous testing before they are deployed, whereas AI models do not yet meet comparable standards. The study reviewed academic literature on AI model risks and surveyed 16 experts, including employees of tech companies, and found sharp disagreements over which evaluation methods and taxonomies to use.

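Some evaluations tested models only in lab settings and did not account for their impact on real-world users. Others applied tests designed for research purposes to production models. Experts also pointed to the problem of data contamination: when a model's training data overlaps with its test data, benchmark results can overstate how well the model actually performs. Benchmarks, they noted, are often chosen because they are convenient rather than because they are effective.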
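To make data contamination more concrete, here is a minimal sketch of one common heuristic: flag any test item that shares a long word sequence (an n-gram) with the training data. The function names, the whitespace tokenization, and the 8-word default are illustrative assumptions, not the method used by ALI or by any organization mentioned in the report.

```python
def ngrams(text: str, n: int = 8) -> set[tuple[str, ...]]:
    """Return the set of word-level n-grams in a lowercased, whitespace-split text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(train_docs: list[str], test_items: list[str], n: int = 8) -> float:
    """Hypothetical helper: fraction of test items sharing at least one n-gram
    with the training corpus. Items shorter than n words are never flagged."""
    if not test_items:
        return 0.0
    # Collect every n-gram that appears anywhere in the training data.
    train_grams: set[tuple[str, ...]] = set()
    for doc in train_docs:
        train_grams |= ngrams(doc, n)
    # A test item counts as contaminated if any of its n-grams was seen in training.
    flagged = sum(1 for item in test_items if ngrams(item, n) & train_grams)
    return flagged / len(test_items)


if __name__ == "__main__":
    train = ["the quick brown fox jumps over the lazy dog"]
    test = ["quick brown fox jumps", "completely unrelated question here"]
    print(contamination_rate(train, test, n=4))  # → 0.5
```

Exact n-gram matching is cheap but crude: it misses paraphrased or translated test items, so a low rate does not prove a benchmark is clean.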

Mahi Hardalupas, an ALI researcher and co-author of the study, warned that developers can game benchmarks, and that even small changes to a model can cause unpredictable changes in its behavior.

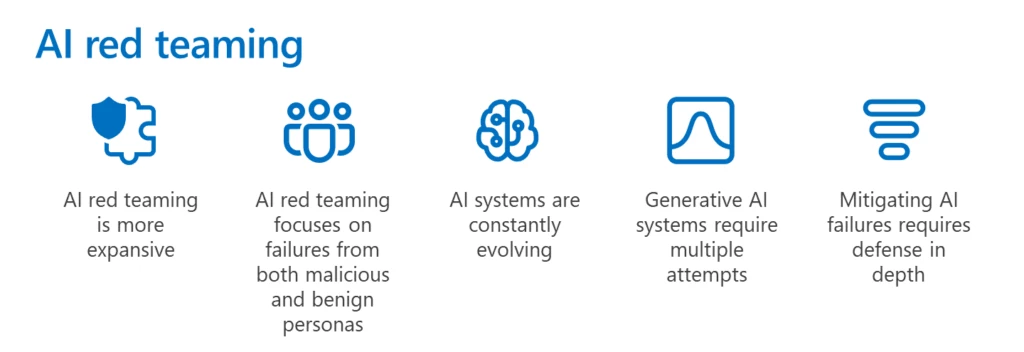

The study also criticized "red-teaming," in which experts try to find and exploit a model's vulnerabilities. Because there are no agreed-upon standards for red-teaming, its effectiveness is hard to measure, and its cost puts it out of reach for many smaller organizations.

Pressure to release models quickly and a reluctance to conduct thorough evaluations make the problem worse. AI labs are releasing models faster than either they or society can ensure those models are safe and reliable.

Despite these challenges, Hardalupas sees a path forward through greater public-sector engagement, suggesting that regulators clearly articulate what they require from evaluations and that the evaluation community be transparent about the current limitations of its methods.

Jones advocates context-specific evaluations that consider who is likely to use a model and how attackers might try to exploit it. This approach requires investment in the underlying science to develop robust and repeatable evaluations.

Ultimately, Hardalupas argues that "safety" is not an inherent property of a model; it depends on the context in which the model is used, who has access to it, and whether the safeguards in place are adequate. Evaluations can identify risks, but they cannot guarantee that a model is safe.
