A Brief Introduction to Quantum Computing

16 July 2024

Zaker Adham

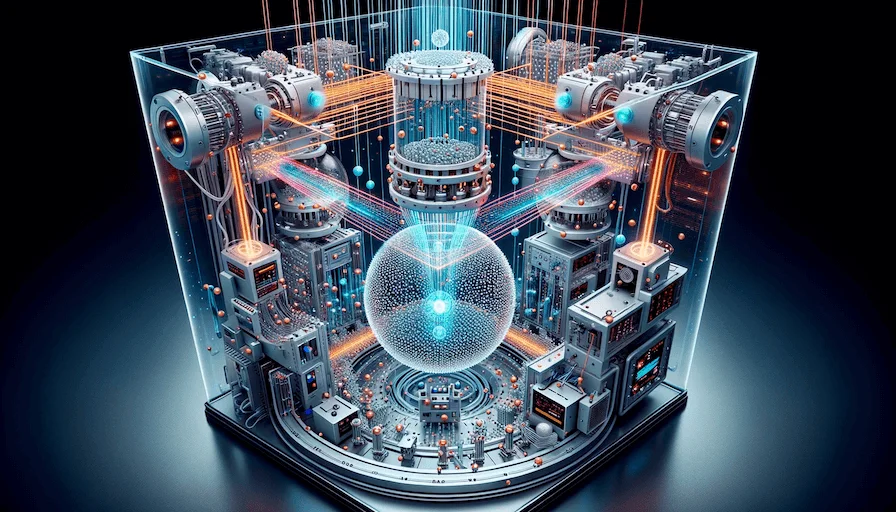

Quantum computing takes a fundamentally different approach to computation, one that harnesses quantum phenomena such as superposition and entanglement, and it is widely expected to reshape parts of the technology landscape.

Engineers and scientists have long been intrigued by the peculiar behavior of photons and other subatomic particles, leading to significant breakthroughs in various fields, including the creation of lasers, transistors, and integrated circuits. These advancements form the backbone of our modern electronics industry, all grounded in the principles of quantum theory, even if the intricate details remain elusive to many.

Quantum Computers: The Next Frontier

Quantum computers use the properties of quantum mechanics directly to operate on data. Classical computers use bits, units of information that are always either 0 or 1; quantum computers use quantum bits, or qubits. A qubit can exist in a superposition, a weighted combination of 0 and 1 that resolves to a single definite value only when it is measured. Describing a register of n qubits takes up to 2^n such weights, called amplitudes, and a quantum operation acts on all of them at once. This is not the same as running every calculation in parallel and reading out all the answers, because a measurement returns only one result. Instead, quantum algorithms arrange for the amplitudes of wrong answers to cancel and those of right answers to reinforce, which for certain problems yields large, in some cases exponential, speedups over the best known classical methods.
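To make superposition a little more concrete, here is a minimal sketch in plain Python that models a single qubit on an ordinary computer. It is an illustration under stated assumptions, not how real quantum hardware is programmed: the qubit is represented as a pair of complex numbers (its amplitudes), and the helper names `hadamard` and `probabilities` are chosen for this example only. The Hadamard gate is a standard operation that turns a definite 0 into an equal superposition of 0 and 1.

```python
import math

# Assumption for this sketch: a single-qubit state is a pair of complex
# amplitudes (a0, a1) with |a0|^2 + |a1|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)  # the qubit is definitely 0


def hadamard(state):
    """Apply the Hadamard gate, which maps a definite 0 to an equal superposition."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Return the chance of measuring 0 and of measuring 1."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


superposed = hadamard(ZERO)
p0, p1 = probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

Applying `hadamard` a second time brings the qubit back to a definite 0, because the two contributions to the amplitude of 1 cancel. That cancellation, known as interference, is what quantum algorithms exploit.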

Classical vs. Quantum Computing

The fundamental difference between classical and quantum computing lies in how they represent information. A classical bit is always either 0 or 1. A qubit can be in a superposition of the two, often loosely described as being both 0 and 1 at the same time, although measuring it always yields just one of them. Combined with entanglement, which links the states of multiple qubits, superposition lets quantum computers solve certain complex problems, such as factoring large integers, much faster than the best known classical algorithms. For many everyday tasks they offer no such advantage.
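The contrast can be sketched in a few lines of plain Python. This is a rough classical simulation under simplifying assumptions, and the function names `uniform_superposition` and `measure` are hypothetical, introduced only for this illustration. A register of n classical bits holds one of 2^n values at a time, whereas describing n qubits takes a list of 2^n amplitudes. Measuring the qubits still produces a single n-bit string, chosen at random with probability equal to the squared magnitude of its amplitude.

```python
import math
import random


def uniform_superposition(n_qubits):
    """Return 2**n_qubits equal amplitudes: every bit string is equally likely."""
    dim = 2 ** n_qubits
    amplitude = 1 / math.sqrt(dim)
    return [amplitude] * dim


def measure(state, rng):
    """Simulate a measurement: pick one bit string, weighted by |amplitude|^2."""
    n_qubits = len(state).bit_length() - 1
    weights = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=weights, k=1)[0]
    return format(index, "b").zfill(n_qubits)


state = uniform_superposition(3)
print(len(state))                        # number of amplitudes for 3 qubits
print(measure(state, random.Random()))   # one 3-bit result per measurement
```

The list of amplitudes doubles in length with every added qubit, which is why simulating large quantum computers on classical machines quickly becomes impractical. A single measurement nevertheless reveals only one bit string, so the extra capacity pays off only when an algorithm can steer the amplitudes toward useful answers.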